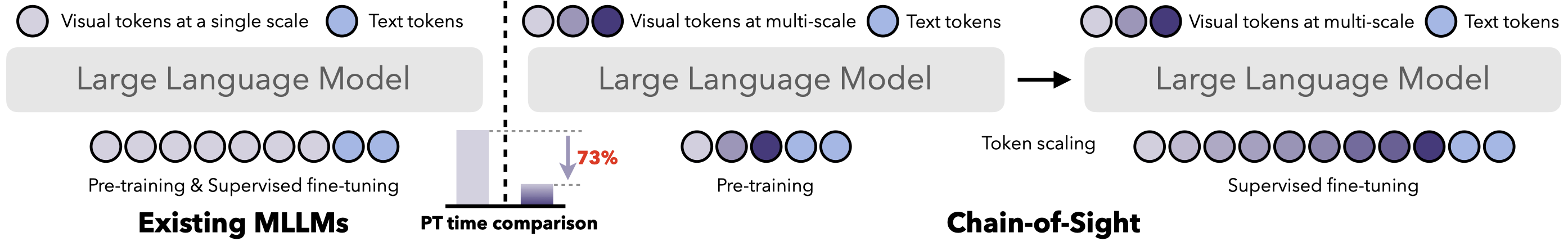

Concept overview.

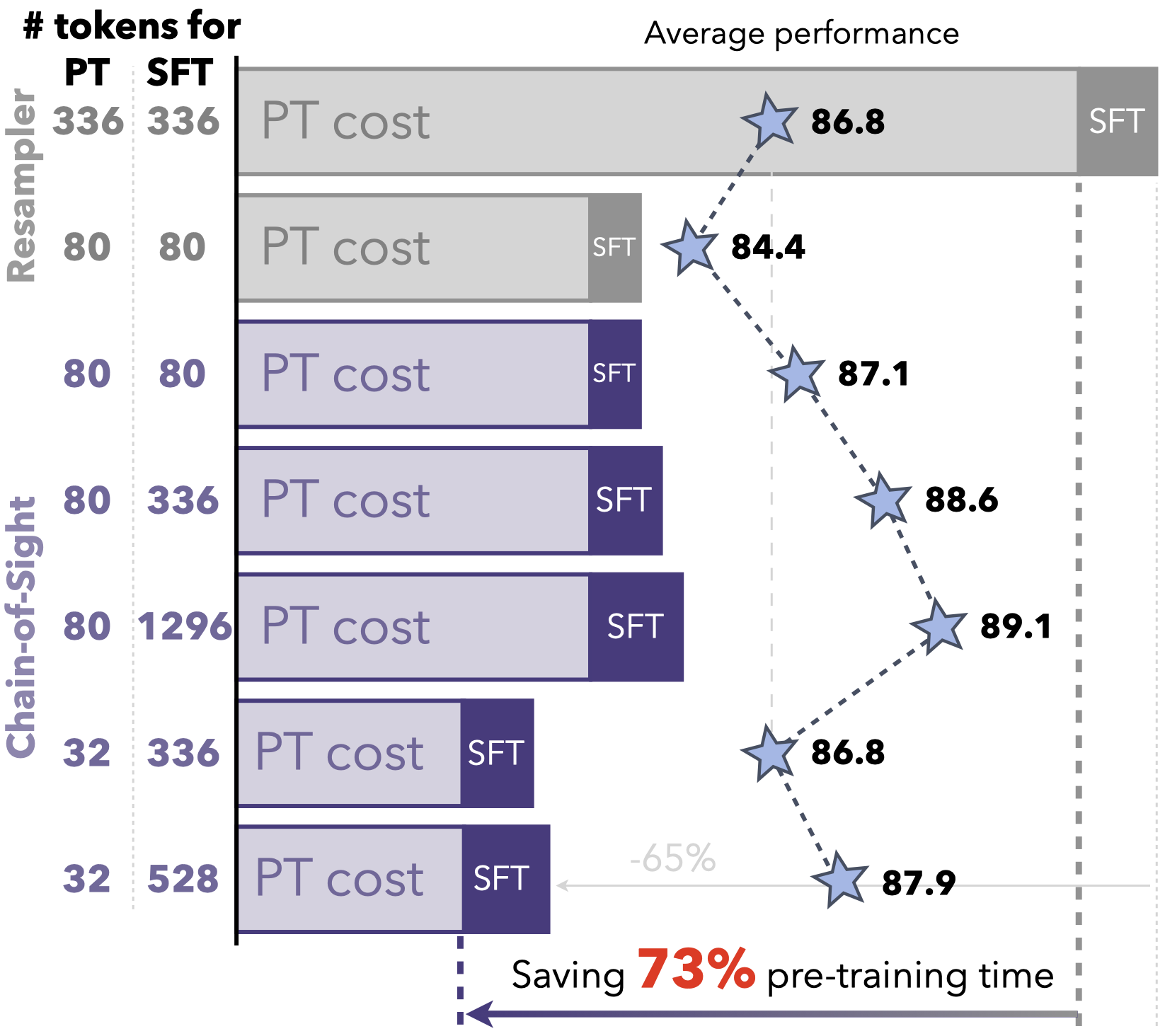

Performance overview.

This paper introduces Chain-of-Sight, a vision-language bridge module that accelerates the pre-training of Multimodal Large Language Models (MLLMs).

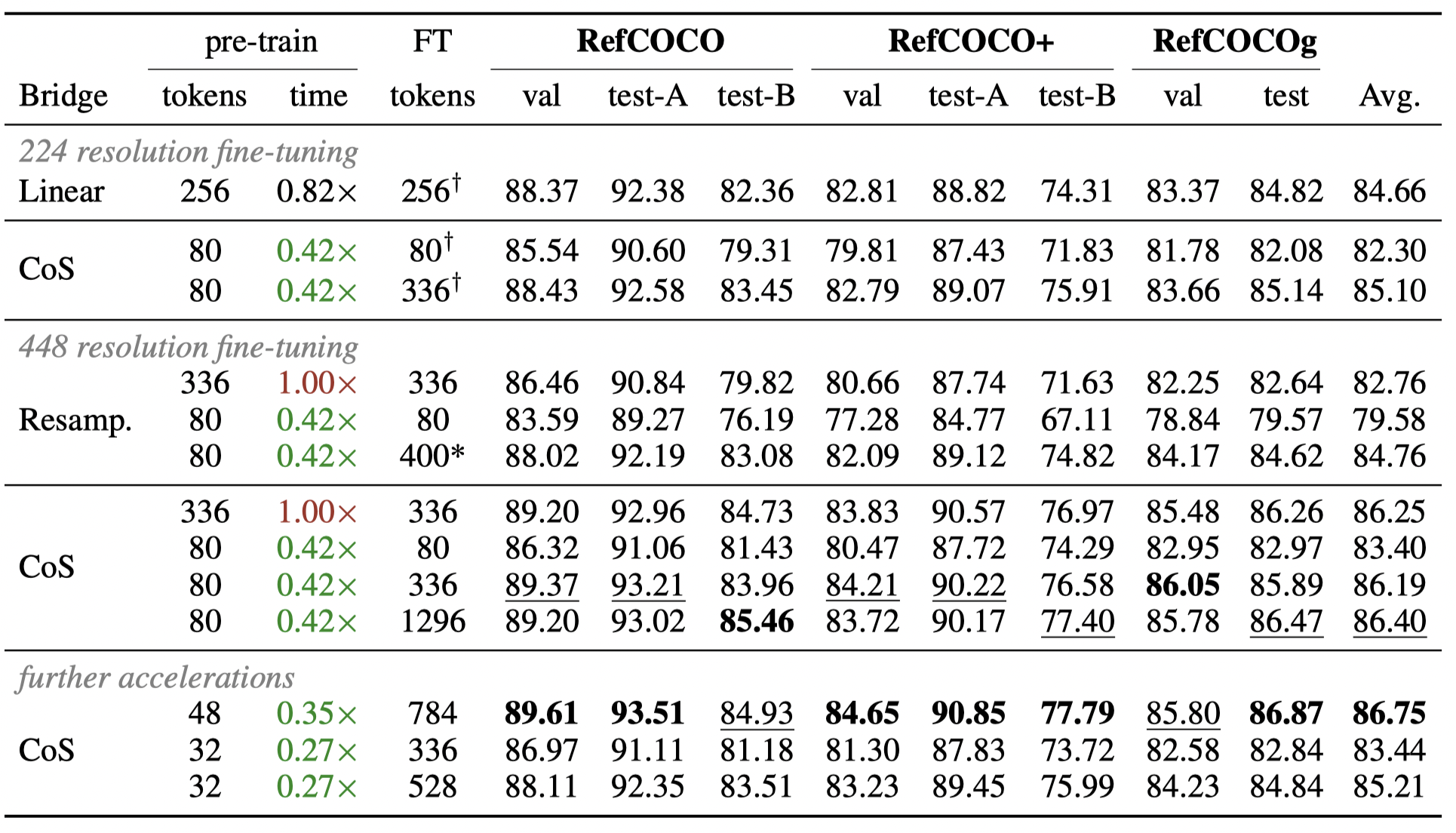

Our approach employs a sequence of visual resamplers that capture visual details at various spatial scales. This architecture not only leverages global and local visual contexts effectively, but also facilitates the flexible extension of visual tokens through a compound token scaling strategy, allowing up to a 16x increase in the token count after pre-training. Consequently, Chain-of-Sight requires significantly fewer visual tokens in the pre-training phase than in the fine-tuning phase. This intentional reduction of visual tokens notably accelerates pre-training, cutting the wall-clock training time by ~73%.

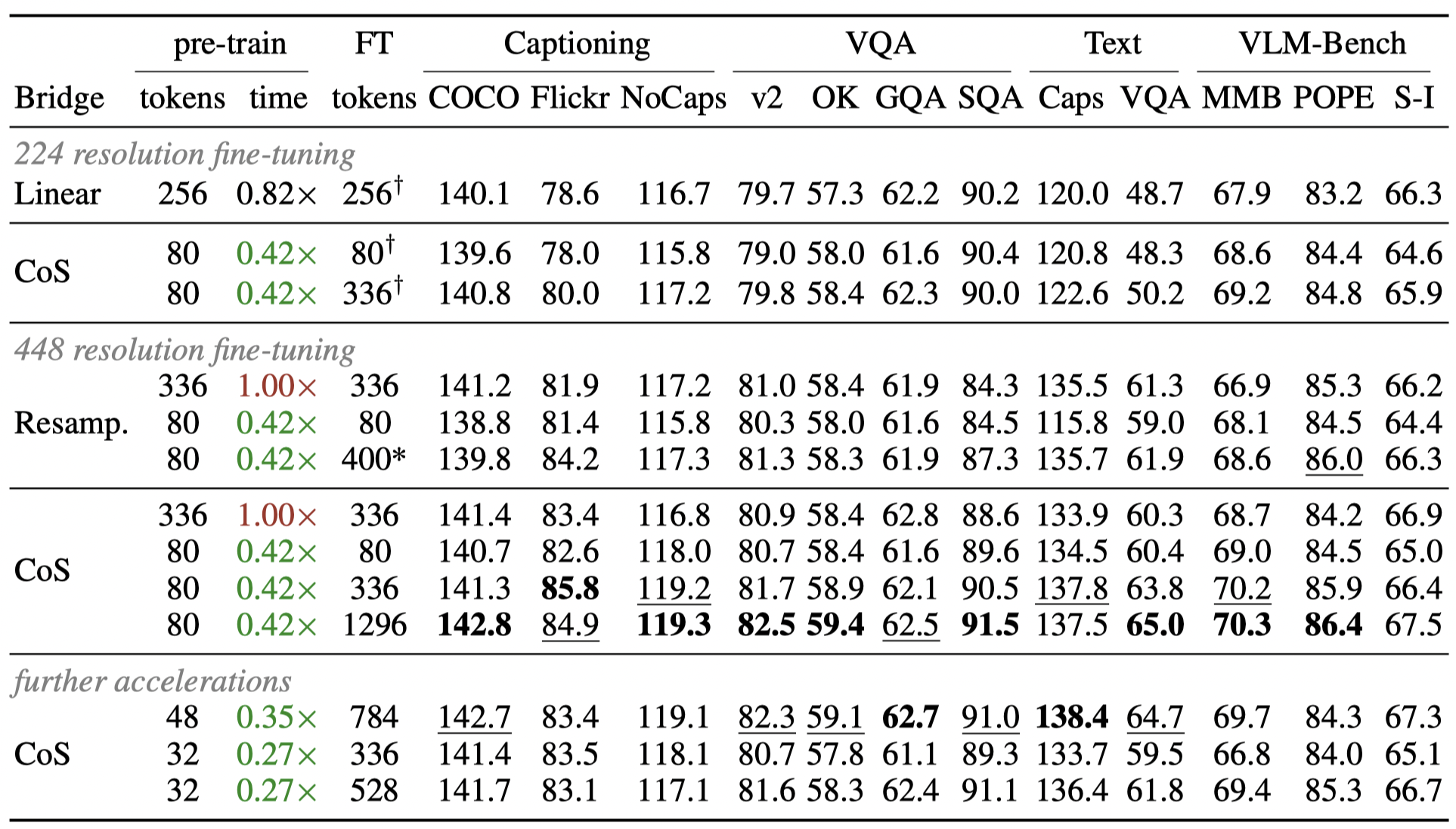

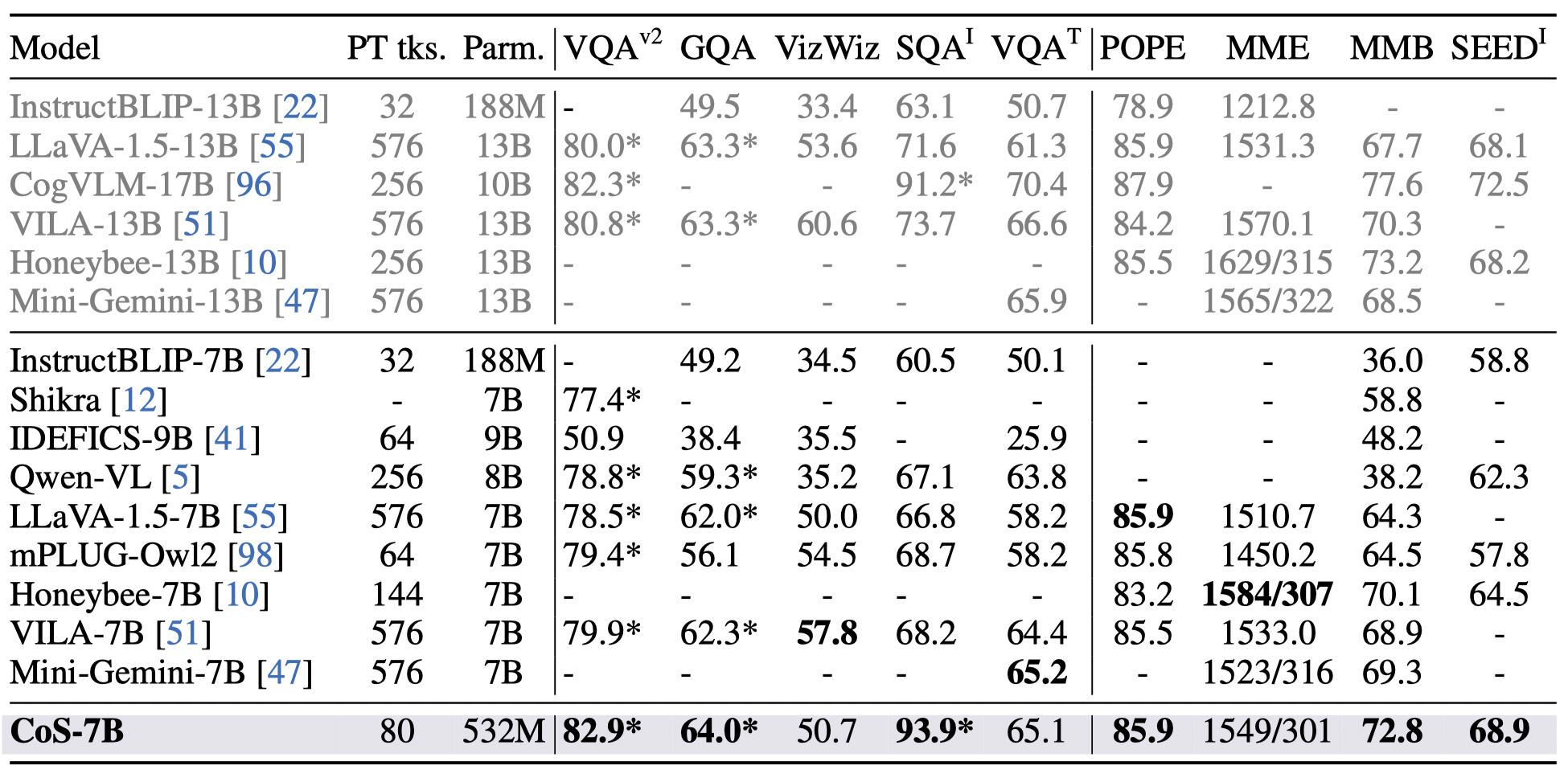

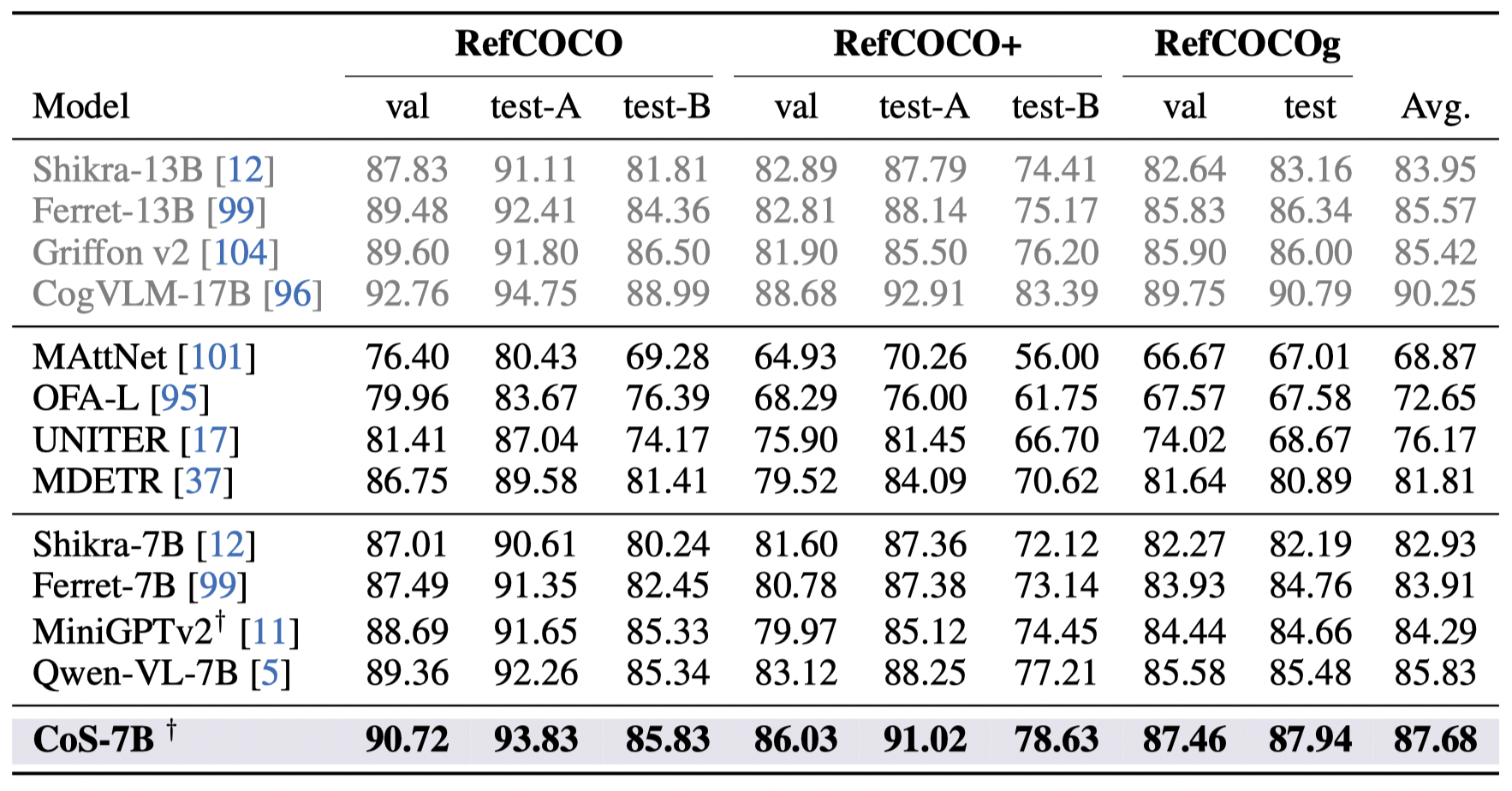

Empirical results on a series of vision-language benchmarks show that the pre-training acceleration from Chain-of-Sight is achieved without sacrificing performance: it matches or surpasses the standard pipeline that uses all visual tokens throughout the entire training process. Further scaling up the number of visual tokens for pre-training leads to stronger performance, competitive with existing approaches on a series of benchmarks.

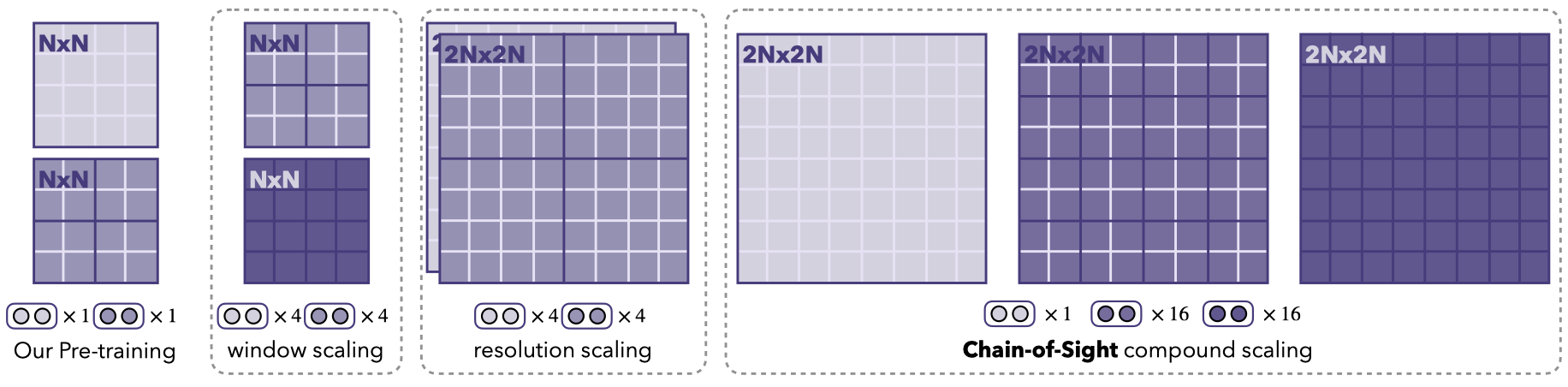

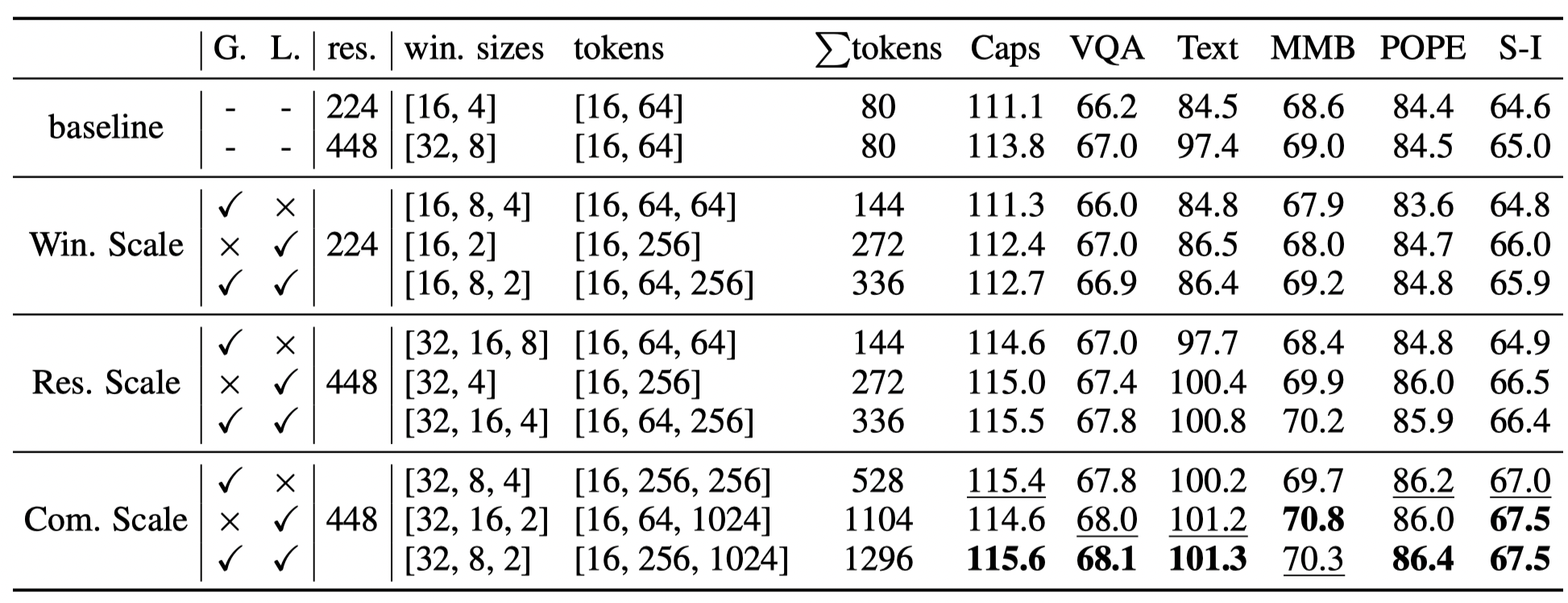

The core component of Chain-of-Sight is the multi-scale visual resampler. We partition the visual features into non-overlapping local windows of various sizes, forming features at multiple scales. At each scale level, each windowed feature is allocated a certain number of learnable queries. Within the visual resampler, these learnable queries perform cross-attention solely on their corresponding windowed feature.
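The windowed cross-attention described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the 16x16 feature map, the `(window_size, queries_per_window)` pairs, and the randomly initialized stand-ins for the learnable queries are all hypothetical, and the sketch omits the query/key/value projections, multiple heads, and feed-forward layers a real resampler would have. The scale configuration is chosen only so that the total comes to 80 tokens.

```python
import numpy as np


def partition_windows(feat, window):
    """Split an (H, W, C) feature map into non-overlapping window x window tiles.

    Returns an array of shape (num_windows, window * window, C).
    """
    H, W, C = feat.shape
    assert H % window == 0 and W % window == 0, "window must divide the feature map"
    tiles = feat.reshape(H // window, window, W // window, window, C)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # (rows, cols, window, window, C)
    return tiles.reshape(-1, window * window, C)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def windowed_cross_attention(queries, windows):
    """Each window's queries attend only to that window's features.

    queries: (num_windows, q, C), windows: (num_windows, n, C).
    """
    C = queries.shape[-1]
    scores = queries @ windows.transpose(0, 2, 1) / np.sqrt(C)  # (num_windows, q, n)
    return softmax(scores) @ windows  # (num_windows, q, C)


def multi_scale_resample(feat, scales, rng):
    """scales: list of (window_size, queries_per_window) pairs (illustrative)."""
    C = feat.shape[-1]
    tokens = []
    for window, q in scales:
        wins = partition_windows(feat, window)
        # Random stand-in for the learnable queries assigned to each window.
        queries = rng.standard_normal((wins.shape[0], q, C))
        out = windowed_cross_attention(queries, wins)
        tokens.append(out.reshape(-1, C))
    return np.concatenate(tokens, axis=0)


rng = np.random.default_rng(0)
feat = rng.standard_normal((16, 16, 32))  # hypothetical 16x16 grid of 32-d features
# One 16x16 window with 16 queries, plus four 8x8 windows with 16 queries each.
tokens = multi_scale_resample(feat, scales=[(16, 16), (8, 16)], rng=rng)
print(tokens.shape)  # (80, 32)
```

Because attention is computed per window, the tokens from the coarse scale summarize global context while those from finer scales depend only on their local region.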

With our multi-scale visual resampler, we present a compound token scaling strategy that combines two ways of scaling the token count, shrinking the window sizes and increasing the input resolution, which together allow the token count to grow by 16x.
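The arithmetic behind the compound scaling can be illustrated with a short calculation. The sketch below assumes a square feature map, a fixed number of queries per window, and a hypothetical configuration in which window sides are halved and the feature-map side is doubled; each step quadruples the number of windows, so the two together give 16x. The specific sizes are illustrative and are not taken from the paper.

```python
def token_count(feature_side, scales):
    """Total visual tokens for a square feature map.

    scales: list of (window_side, queries_per_window) pairs (illustrative).
    """
    total = 0
    for window_side, queries in scales:
        assert feature_side % window_side == 0, "window must divide the feature map"
        num_windows = (feature_side // window_side) ** 2
        total += num_windows * queries
    return total


# Hypothetical pre-training setup: 16x16 features, windows of side 16 and 8.
base = token_count(16, [(16, 16), (8, 16)])
# Window scaling: halve every window side (4x more windows per scale).
window_scaled = token_count(16, [(8, 16), (4, 16)])
# Compound scaling: also double the feature-map side (another 4x).
compound = token_count(32, [(8, 16), (4, 16)])

print(base, window_scaled, compound)  # 80 320 1280
print(compound // base)  # 16
```

The queries per window stay fixed throughout, so the growth in token count comes entirely from the number of windows.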

Compared with linear projection and a visual resampler, Chain-of-Sight offers faster pre-training and on-par or better performance.

With 80 tokens used during pre-training, scaling up the number of tokens in the supervised fine-tuning stage brings significant gains in captioning, text recognition, and hallucination mitigation.

Compared with existing approaches, CoS-7B, pre-trained with fewer tokens and fine-tuned with substantially fewer parameters, achieves competitive performance on both question answering tasks (above) and referring expression comprehension (below).

@article{huang2024accelerating,
  author = {Huang, Ziyuan and Ji, Kaixiang and Gong, Biao and Qing, Zhiwu and Zhang, Qinglong and Zheng, Kecheng and Wang, Jian and Chen, Jingdong and Yang, Ming},
  title = {Accelerating Pre-training of Multimodal LLMs via Chain-of-Sight},
  journal = {arXiv preprint arXiv:2407.15819},
  year = {2024},
}